Better Measuring Safety Risk is Critical to AV Success

In a previous post, we presented a framework for discussing and regulating AV safety under which progress on understanding and communicating AV safety would fall into one of three categories:

Sociological: How should or would society like to balance the risks and rewards of new technology? What level of certainty is required around measuring safety risk?

Political: Who should decide how safe AVs should be, who measures this, and how is this enforced? The nature of AV technology might require an evolution of the current (and largely successful) automotive regulatory framework.

Scientific: What methods can usefully measure safety risk and compare it to a desired benchmark?

These are fairly distinct questions that have differing stakeholders and can be advanced independently. We will focus on the last category – namely, how do we measure the safety of an AV? Our findings suggest that we likely do not need to observe AVs for billions of miles to be assured that they are less likely than human drivers to get into fatal crashes, and that safety can instead be extrapolated from smaller data sets. This blog post will investigate when safety can be extrapolated from smaller data sets as well as set the stage for further analysis of other, more practical ways to measure AV safety.

Analyzing Risk

All travel involves safety risk, whether it is driving, flying, biking, or walking. Most people do not formally analyze the safety risk of a journey, but make a quick judgement as to whether the positive outcomes of a trip (commuting, shopping, etc.) justify its cost, including any perception of safety risk.

Being able to measure the safety risk of AV software would accelerate deployment and public acceptance. First, companies will need to make decisions about when to deploy AVs, where they should be deployed, and when the removal of safety drivers and truly driverless operation can be justified. Second, even if AVs are far safer than human drivers, AV-involved crashes will inevitably injure and kill people; at that point, manufacturers will likely need to demonstrate that they have met an appropriate standard of care. Finally, governments either have imposed or are likely at some point to impose safety regulations for AVs, and they already require some measurement of safety before approving applications to permit custom-built AVs. Better ways to measure and articulate the case that AVs are safe can help reach all of these outcomes.

Broadly speaking, there is a need for further technological development to assist both the private sector and governments in measuring the safety risk of AVs. This overlaps with, but is distinct from, the development of AV technology itself. The line is often blurry – for example, simulation is used as a tool for developing AV capabilities and also can be used to validate AV safety performance under a range of scenarios. Several organizations have proposed a framework for AV validation that leans heavily on simulation, and a database of potential real-world scenarios to be tested in simulation is under construction. These proposals are promising as a potential component of a safety argument, but the need for further research into simulations and scenarios as well as greater standardization of AV technology means that there will be considerable time before a standardized safety case emerges.

Going Back to Basics

A number of advocates have argued that AVs should be evaluated just as humans are – with a road test. While it’s not clear that we should settle for the imperfect way that things have been done for human drivers, there is some intuitive logic to the basic idea of measuring AV safety by seeing if they can navigate public roads successfully, minimizing accidents or software failures. In fact, the state of California collects data on how many miles AVs drive, details on any accidents, and how frequently the self-driving software “disengages.”

However, experts have criticized the California statistics at great length as a poor gauge of AV safety, largely because of inconsistent definitions and because there are legitimate reasons AV software might disengage that do not reflect poorly on safety. More fundamentally, the RAND Corporation argued in a landmark 2016 article, “Driving to Safety”, that it is infeasible to benchmark AV safety against human performance by measuring how well AVs do in “field tests”: because human drivers crash relatively rarely on a per-mile basis, you cannot tell whether a software “driver” is safe with a small sample size. Phil Koopman, a Professor at CMU, has fleshed this out by arguing that driving is full of situations that, while rare in absolute terms, occur on our roads every day. Therefore, the argument goes, we cannot extrapolate the safety of an AV from a “small” sample of tens of millions of miles or even more.

This post explores that assumption a little deeper, with the goal of thinking about how we might be able to use on-road testing to measure AV safety.
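The mileage argument described above can be made concrete with a short calculation. The sketch below is a minimal illustration, not RAND's actual method: it treats each mile as an independent trial and asks how many event-free miles would be needed to claim, at 95% confidence, that an AV's event rate is below a human benchmark. The fatality rate used is an assumed illustrative figure (roughly the U.S. average); the all-crash rate reuses the "once every 500,000 miles or so" figure quoted later in this post. The function name `failure_free_miles` is hypothetical.

```python
import math

def failure_free_miles(rate_per_mile: float, confidence: float) -> float:
    """Event-free miles needed to claim, at the given confidence,
    that the true per-mile event rate is below rate_per_mile.

    Assumes independent miles, so P(no events in n miles) = (1 - rate)^n.
    Solving (1 - rate)^n = 1 - confidence for n gives the result.
    """
    if not (0.0 < rate_per_mile < 1.0 and 0.0 < confidence < 1.0):
        raise ValueError("rate and confidence must both be in (0, 1)")
    return math.log(1.0 - confidence) / math.log1p(-rate_per_mile)

# ASSUMPTION: illustrative human benchmark, not a figure from this post.
FATALITY_RATE = 1.09 / 100_000_000  # about 1.09 fatalities per 100 million miles
# From this post: roughly one crash of any kind per 500,000 miles.
CRASH_RATE = 1 / 500_000

fatal_miles = failure_free_miles(FATALITY_RATE, 0.95)
crash_miles = failure_free_miles(CRASH_RATE, 0.95)

print(f"Fatal-crash benchmark: {fatal_miles:,.0f} miles")
print(f"All-crash benchmark:   {crash_miles:,.0f} miles")
```

Under these assumptions the fatal-crash benchmark requires hundreds of millions of miles while the all-crash benchmark requires on the order of a million, a gap of roughly two orders of magnitude. That gap is why it matters whether minor crashes tell us anything about major ones.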

Minor crashes are predictive of major ones

We start by unpacking one of the core assumptions in RAND’s conclusion, namely, the suggestion that, to demonstrate the safety of an AV, the AV would need to accumulate enough miles to demonstrate that it gets into fewer fatal collisions than human drivers. This suggests that an AV successfully minimizing property damage crashes or injury crashes does not tell us whether AVs can avoid fatal crashes. The implied reasoning is that these are different types of crashes with different precipitating causes, so avoiding fender-benders is not related to avoiding catastrophic crashes. But is that true?

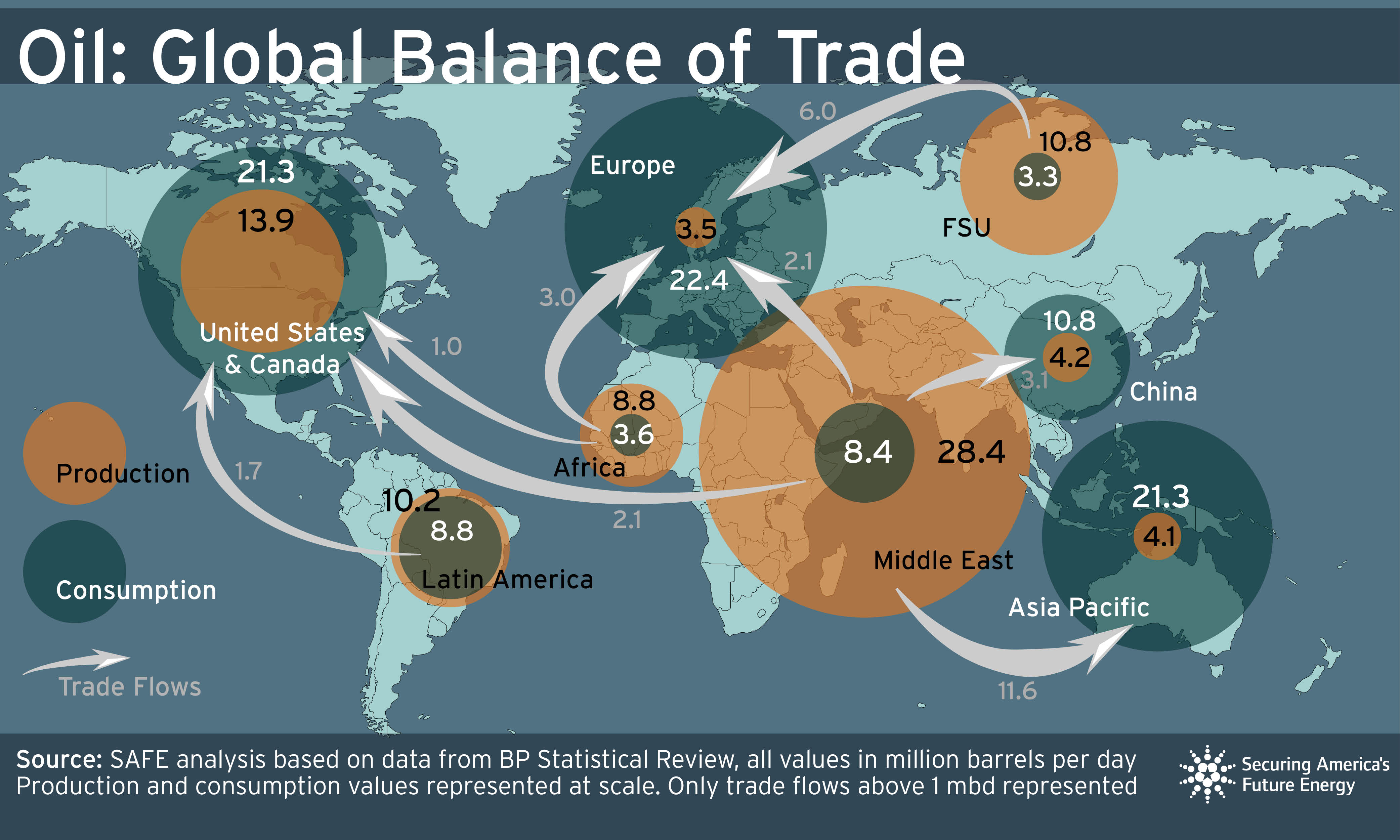

We gathered data from the National Highway Traffic Safety Administration (NHTSA) on the historical rates of crashes of different severities. We performed statistical analysis to determine how yearly trends in fatal, injury, and property crashes relate to each other. We found near-complete overlap – see the accompanying image for a visual representation.

The general trend is that the numbers of fatal, injury, and property crashes track each other closely. This strongly suggests that the underlying causes of all crashes largely overlap. This would mean that fatal crashes are, largely, more severe versions of injury and property crashes and not sui generis events that need to be addressed separately.
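One simple way to quantify how closely yearly series track each other is the Pearson correlation coefficient. The post does not say which statistic was actually used, so the sketch below is only one plausible choice. The yearly values are hypothetical index numbers (first year = 100) made up for illustration; they are not NHTSA data.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("series must have equal length of at least 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / math.sqrt(var_x * var_y)

# HYPOTHETICAL placeholder series (index, first year = 100). NOT real NHTSA data.
fatal_index = [100, 96, 91, 94, 98]
injury_index = [100, 97, 90, 95, 99]
property_index = [100, 95, 92, 93, 97]

print(f"fatal vs injury:   {pearson(fatal_index, injury_index):.2f}")
print(f"fatal vs property: {pearson(fatal_index, property_index):.2f}")
```

A coefficient close to 1 for each pair would correspond to the "track each other closely" pattern described above; values near 0 would indicate that the severities move independently.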

Common Crash Causes

A second major data point is from crash data in New York City. The city posts a summary of every police accident report online, a very rich source of data. We analyzed the period from May 2014 to January 2019. This set contained more than 700,000 crashes, with 745 fatal and over 140,000 severe enough to cause injuries, with the remainder causing property damage only. The police report indicated the primary cause for each crash.

Since the NYPD attributes crashes to one of 56 different causes, it is possible to test our thesis that the same underlying reasons contribute to fatal and non-fatal crashes. If that is the case, we would see a fairly similar breakdown of causes across all crash severities (fatal, injury, property).

Most crashes – for all category types – were distributed across a relatively small number of crash reasons. We created “radar” charts to illustrate whether the different types of crashes are caused by similar conditions.

The results show very high overlap between crash causes. There are some categories that show differences (for example, “failure to yield” is a more common cause of a fatal or injury crash, while “backing unsafely” is more common as a cause for property crashes).

We also performed an overlap analysis of the common causes and found that the distribution of causes is very similar for the different crash severities.
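To make the idea of an overlap analysis concrete, here is a minimal sketch of one possible measure: convert each severity's cause counts into shares, then sum the smaller share for every cause. The result is 1.0 for identical distributions and 0.0 for distributions with no causes in common. The post does not specify which overlap measure was used, so this choice is an assumption, as are the function names. The counts are hypothetical placeholders, not the NYPD figures.

```python
def shares(counts):
    """Convert raw counts per cause into fractions of the total."""
    total = sum(counts.values())
    if total <= 0:
        raise ValueError("counts must sum to a positive number")
    return {cause: n / total for cause, n in counts.items()}

def overlap(counts_a, counts_b):
    """Overlap of two cause distributions: sum of the smaller share per cause."""
    share_a, share_b = shares(counts_a), shares(counts_b)
    causes = set(share_a) | set(share_b)
    return sum(min(share_a.get(c, 0.0), share_b.get(c, 0.0)) for c in causes)

# HYPOTHETICAL counts for illustration only. NOT the NYPD data.
fatal_counts = {
    "driver inattention": 30,
    "failure to yield": 25,
    "following too closely": 10,
    "backing unsafely": 2,
    "other": 33,
}
property_counts = {
    "driver inattention": 300,
    "failure to yield": 120,
    "following too closely": 150,
    "backing unsafely": 80,
    "other": 350,
}

print(f"overlap: {overlap(fatal_counts, property_counts):.2f}")
```

Working with shares rather than raw counts keeps the comparison fair even though property crashes vastly outnumber fatal ones.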

Why Does This Matter?

We’ve built support for the thesis that fatal collisions are not categorically different from less severe ones, but instead arise from the same human errors that produce collisions that cause injuries and property damage.

It is worth noting that the causes discussed above – and most other primary causes used to explain crashes – are instances of human error. In New York City, similar types of driving mistakes produce crashes with different damage outcomes, and these mistakes are endemic to human driving. That similar types of mistakes produce varied crash outcomes suggests that driver success in avoiding minor crashes is correlated with avoiding more severe collisions as well.

What does this mean for AV safety assurance? It suggests that a common set of behavioral and external factors explains almost all crashes, whether more or less severe. We already understand this to be true for human drivers and use this insight to assess driver safety based on observing their behavior for relatively short periods of time – that is why your insurance rates go up if you get into a minor accident, and it is the basic principle behind why we can assess drivers on a twenty-minute driving test instead of waiting to see if they crash more often than once every 500,000 miles or so.

Application to AVs

In the end, what does our analysis tell us about any possible “shortcuts” to understanding or measuring the safety of AVs? The general principle we have extracted, that the ability to avoid less severe crashes is strongly correlated with the ability to avoid more severe crashes, tells us that we don’t need to observe AVs for a distance long enough to assure us that they are less likely to get into fatal crashes than human drivers. Instead, observing their general likelihood of avoiding crashes of any severity will be strongly indicative of their ability to avoid fatal crashes as well. We anticipate two important questions:

- Perhaps we can extrapolate whether AVs are safe based on their performance relative to humans using all crashes – but this will still take millions of miles, and miles may need to be re-run every time there is a software update. Additionally, a statistically significant sample will need to be tailored for each AV use case (e.g. city roads, rain, night) or “operational design domain,” each requiring millions of miles. So is there any practical implication to the argument we’ve made to benchmark against the rate of all crashes?

- This analysis relies on data from human drivers. Perhaps only humans “fail” in a pattern where minor failures are predictive of major ones. Perhaps AV failures will follow a different pattern where severe failures (e.g. fatalities) are more common relative to small failures (this is essentially Professor Koopman’s argument). Or perhaps AVs interacting with human drivers will fail in different ways altogether. How do we know that observing AVs over smaller samples can help us extrapolate to how AVs will perform in the real world?

These questions are linked by the common theme of “how do we know when small samples are indicative of larger ones,” which we will examine in an upcoming blog post. One final, thought-provoking analysis we’d like to share with you is a quick response to the second question. There is little public data on AV failures; the best source of data, for all its flaws, is California’s collection of disengagements and AV accident reports.

SAFE analyzed all 131 AV accident reports filed as of January 2019 and zeroed in on the 80 crashes occurring with an AV in autonomous mode and not resulting from an act of vandalism. When plotting the crashes by degree of severity (fatality, injury, or property damage only), we found a familiar pattern – a distribution where less severe crashes are considerably more common than more severe ones – and “disengagements” are considerably more common than any crash. This is the best publicly available evidence that AV crashes are similar in distribution to human crashes, which implies that major AV crashes may be correlated with and predicted by minor AV crashes. This will be a jumping-off point for our further analysis in another post to come soon.